Results of the 2024 Forecasting Contest

Congratulations to Martin H, who won with a Brier Score of 0.177.

Updated to correct an initial error on one question, which affected quite a few things, including the winner.

The results of the 2024 Forecasting Contest are out!

Congratulations to Martin H, who won - again! - with a Brier Score of 0.177.

We’ll be looking below at the overall results, how each question resolved, the questions where people tended to fall down, how this year compared to last year, and how well my own predictions and the Wisdom of Crowds[1] did. We’ll also look at whether there were any differences between people who work in politics, media and current affairs and those who don’t.

A detailed section on Brier Scoring[2] and the Wisdom of Crowds is contained in an appendix at the end.

The Results

85 people entered, of whom 83 submitted valid entries[3]. Below are the 11 people who scored better than the Wisdom of Crowds.

As mentioned above, many congratulations to Martin H! Astonishingly, Martin also won last year.

Congratulations are also due to Firestone - 6th place last year as well - who, along with Martin, has demonstrated that it’s possible to beat the Wisdom of Crowds two years running[4].

At this point I urge everyone in the top 10 to share this far and wide for bragging purposes.

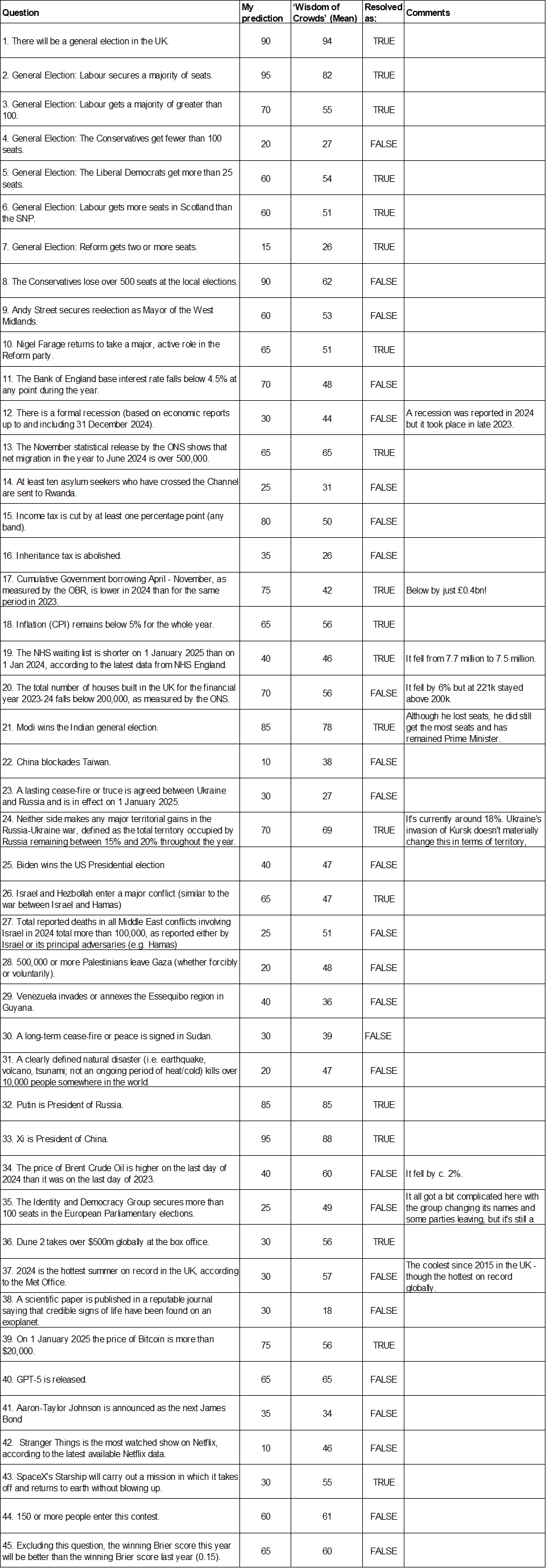

How did all the questions resolve?

Overall, the results were worse than last year. The winning score was worse[5], the score required to get into the top 10 was worse, the mean score was worse[6] and so was the Wisdom of Crowds score. This year, only 13% of entries did better than the Wisdom of Crowds, compared to 16% last year. I’m not sure whether that’s because the questions were harder or the year was more turbulent - or perhaps both.

Interestingly, a higher proportion of people did better than chance (i.e. scored below the 0.25 you would get by putting 50% on every question): 69% of entries this year, compared to 55% last year.

34% of entrants said they worked in politics, public policy, current affairs or media. They did noticeably better on average, with a mean Brier Score of 0.235 vs 0.254 for those who did not[7] - though interestingly they made up only 4 of the top 10 scores[8]. They also had a lower standard deviation.

70% of entrants who declared their sex were male and 30% female. Men tended to do better, with a mean Brier score of 0.239 vs 0.261 for women, though interestingly they also had a higher standard deviation.

So, how did I do?

I also did worse than last year: my Brier Score of 0.194 wouldn’t have got me into the top 10 last year, and I fell from 2nd to 7th place - though overall I’m still very happy with that.

Like many people, I failed to predict Reform’s breakthrough at the General Election, though I was otherwise pretty happy with my General Election predictions. I did much worse in the mayoral and local elections, being absurdly overconfident about how many seats the Tories would lose[9] and predicting that Andy Street would cling on. I was also overconfident about income tax being cut (I assumed it would be part of a pre-election giveaway, but Sunak stuck with National Insurance cuts instead[10]).

More positively, I got almost all of the questions about the UK domestic economy and public services right, and with an appropriate degree of confidence (given how close some were) for both these and the one I got wrong (house-building). I also got all 15 international affairs questions directionally right - perhaps a sign I should be more confident on some of these. Less positively, I was wrong about Dune and SpaceX, and too optimistic about GPT-5 and the number of people entering the contest[11].

Overall, I’m a bit disappointed to have done worse than the year before - but equally, I didn’t do as badly as I feared at times.

What about 2025?

If you enjoyed the 2024 contest, I’ll be holding another one for 2025, opening on 13 January.

I hope you’ll enter again - and tell your friends, family and colleagues, so that this year we can have an even bigger field!

Appendix: Brier Scores and the Wisdom of Crowds

So what's a Brier score then, anyway?

If you’re already confident you know what a Brier score is - or you’re happy just knowing that lower numbers are better - feel free to skip this section.

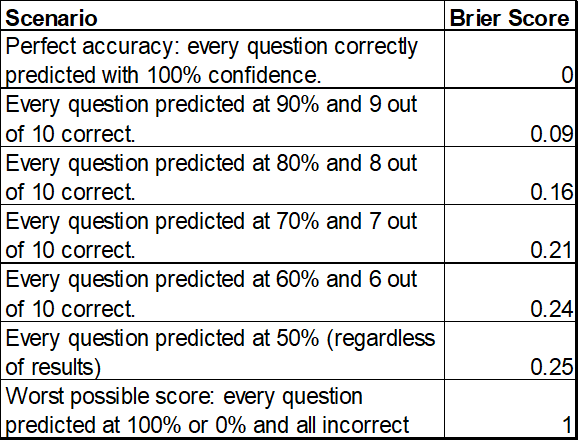

A Brier score is a way of scoring predictions that rewards both getting the prediction right and being accurate about how confident you were in that prediction. It has the advantage that it is hard to game: if you genuinely think the probability of something happening is 80%, you should put 80%. Or, to put it another way, someone who gets 7 out of 10 things right will get a better (lower) score if they put 70% for each question than if they put 80% or 60%.
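A quick way to check the 7-out-of-10 claim is a short Python sketch (illustrative only, not the code used to score the contest). It assumes someone gives the same probability p on ten questions, seven of which happen, and compares p = 0.6, 0.7 and 0.8; the function name is hypothetical.

```python
def brier_for_constant_forecast(p: float, hits: int, total: int) -> float:
    """Brier score for giving the same probability p on every question,
    when `hits` of the `total` events actually happen (hypothetical helper)."""
    misses = total - hits
    # An event that happens costs (1 - p)^2; one that doesn't costs p^2.
    return (hits * (1 - p) ** 2 + misses * p ** 2) / total


for p in (0.6, 0.7, 0.8):
    score = brier_for_constant_forecast(p, hits=7, total=10)
    print(f"Putting {p:.0%} on everything: Brier score {score:.3f}")
```

Putting 70% scores 0.210, while 60% and 80% both score 0.220, so the honest forecast comes out best.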

The Brier score is calculated as the mean squared error across all the questions. For example, if you predicted something had an 80% chance of happening, your score for that question will be 0.04 (i.e. (1 - 0.8)²) if it does happen and 0.64 (i.e. 0.8²) if it doesn’t. The scores for all the questions are added up and then divided by the number of questions, giving a score between 0 and 1, where lower scores are better. You can therefore improve your Brier score both by getting more things right and by being more realistic ('better calibrated') about how likely you are to be right, because getting something wrong costs you less when you were only weakly confident about it than when you were very confident.
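The calculation can be sketched in a few lines of Python. This is an illustration, assuming forecasts are stored as probabilities between 0 and 1 and outcomes as 1 (happened) or 0 (didn’t); the `brier_score` name is hypothetical.

```python
def brier_score(forecasts: list[float], outcomes: list[int]) -> float:
    """Mean squared error between probability forecasts (0-1) and outcomes (1 = happened, 0 = didn't)."""
    if len(forecasts) != len(outcomes):
        raise ValueError("need one outcome per forecast")
    if not forecasts:
        raise ValueError("need at least one question")
    squared_errors = [(p - o) ** 2 for p, o in zip(forecasts, outcomes)]
    return sum(squared_errors) / len(squared_errors)


# The worked example: an 80% forecast scores 0.04 if the event happens, 0.64 if it doesn't.
print(round(brier_score([0.8], [1]), 2))  # 0.04
print(round(brier_score([0.8], [0]), 2))  # 0.64
# Averaged over two such questions, one of which happened.
print(round(brier_score([0.8, 0.8], [1, 0]), 2))  # 0.34
```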

Putting 50% for a probability guarantees a score of 0.25 for that question. In this contest I therefore awarded 0.25 for any question that participants skipped, as this was equivalent to saying they thought it was as likely as not to happen. Someone could guarantee an overall score of exactly 0.25 by putting 50% for every question; in theory, therefore, no-one should get a Brier score higher than 0.25 - though in practice, that’s not the case.
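The rule for skipped questions can be sketched the same way. Here a skipped answer is assumed to be stored as `None` (a hypothetical representation, not necessarily how the contest recorded it) and is scored as a 50% forecast, so it always contributes 0.25.

```python
from typing import Optional


def contest_brier_score(forecasts: list[Optional[float]], outcomes: list[int]) -> float:
    """Brier score in which a skipped question (None) counts as a 50% forecast."""
    if len(forecasts) != len(outcomes) or not outcomes:
        raise ValueError("need one outcome per forecast, and at least one question")
    total = 0.0
    for p, outcome in zip(forecasts, outcomes):
        if p is None:
            p = 0.5  # "as likely as not": (0.5 - 1)^2 == (0.5 - 0)^2 == 0.25
        total += (p - outcome) ** 2
    return total / len(outcomes)


# Putting 50% (or skipping) everywhere scores exactly 0.25, whatever happens.
print(contest_brier_score([0.5, None, 0.5, None], [1, 0, 0, 1]))  # 0.25
```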

I find Brier scores slightly counter-intuitive, so below are some hypothetical scenarios and the associated scores:
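To give a feel for this, the following Python sketch scores a handful of illustrative ten-question scenarios. The scenarios are made up for this example rather than taken from the contest, and the code assumes the same probability is given on every question.

```python
def brier_score(forecasts: list[float], outcomes: list[int]) -> float:
    """Mean squared error between forecasts and 0/1 outcomes."""
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(outcomes)


# (description, probability given on all ten questions, how many of the ten happened)
SCENARIOS = [
    ("50% on everything", 0.5, 5),
    ("100% on everything, all 10 happen", 1.0, 10),
    ("100% on everything, 9 of 10 happen", 1.0, 9),
    ("90% on everything, 9 of 10 happen", 0.9, 9),
    ("90% on everything, 5 of 10 happen", 0.9, 5),
    ("60% on everything, 5 of 10 happen", 0.6, 5),
]

for label, p, hits in SCENARIOS:
    outcomes = [1] * hits + [0] * (10 - hits)
    print(f"{label:<36} {brier_score([p] * 10, outcomes):.2f}")
```

This prints 0.25, 0.00, 0.10, 0.09, 0.41 and 0.26 respectively: a single 100%-confident miss among ten (0.10) costs more than a consistent 90% with one miss (0.09), and being 90% sure when only half the events happen (0.41) is far worse than simply putting 50% (0.25).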

What about the Wisdom of Crowds?

There’s a theory that if you average predictions, the average will be better than most individual sets of predictions: different people have different information, positive and negative random errors cancel out, and so on. The Wisdom of Crowds score is found by taking the mean of everyone’s forecast for each question and then scoring those means as if they were an entry in its own right.
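The crowd forecast described above can be sketched in Python as follows. The three entrants and their forecasts are invented for illustration; the code takes the mean of each question’s forecasts and scores the result as if it were another entry.

```python
from statistics import mean


def brier_score(forecasts: list[float], outcomes: list[int]) -> float:
    """Mean squared error between forecasts and 0/1 outcomes."""
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(outcomes)


def wisdom_of_crowds(entries: list[list[float]]) -> list[float]:
    """Average everyone's forecast question by question."""
    # zip(*entries) regroups the forecasts so each tuple holds one question's answers.
    return [mean(answers) for answers in zip(*entries)]


# Hypothetical entries: one row per entrant, one column per question.
entries = [
    [0.9, 0.2, 0.6],
    [0.6, 0.5, 0.9],
    [0.7, 0.1, 0.3],
]
outcomes = [1, 0, 1]

crowd = wisdom_of_crowds(entries)
for i, entry in enumerate(entries, start=1):
    print(f"Entrant {i}: {brier_score(entry, outcomes):.3f}")
print(f"Crowd:     {brier_score(crowd, outcomes):.3f}")
```

Here the crowd scores about 0.101, beating entrants 2 (0.140) and 3 (0.197) but not entrant 1 (0.070). Because squared error is convex, the crowd’s score on each question can never be worse than the average of the individual scores on that question, which is one reason averaging tends to do well.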

The Wisdom of Crowds had a Brier score of 0.204, better than all but 11 individual entrants. Which is pretty good! It means that averaging everyone’s forecasts would have beaten around 87% of contestants - and you are much better off trusting the average than any random individual (including some of the people who work in this field!). On the other hand, a few people did significantly better, including some who’ve done so two years running, which suggests that we can do better at predicting things than just averaging our guesses.

[1] I.e. the average of all predictions - see the Appendix for more details.

[2] Very quick summary: lower scores are better; getting 0 means getting every question right with 100% confidence, and guessing 50:50 for every question would give you 0.25. Brier scores reward contestants not just for being right, but for accurately assessing how confident they are that an answer is right, while over-confident wrong answers are penalised.

[3] The remaining two, who shall not be named, submitted ‘yes’, ‘no’ and ‘maybe’ type answers rather than percentage chances.

[4] I’m also really pleased to have done this myself - though I’m conscious I have an advantage in picking the questions.

[5] It would have been 5th last year.

[6] A mean of 0.249 this year vs 0.239 last year.

[7] This means that the average score of those not working in current affairs etc. was worse than they would have got by putting 50% on every question.

[8] But they made up only one of the bottom 10 - so perhaps working in these fields is less a predictor of excelling than of avoiding too many major errors.

[9] They did badly, but not quite ‘losing over 500 seats’ badly - and yet I gave it a 90% chance.

[10] Which I actually agree was the better option policy-wise, even if not politically.

[11] It turns out contest entries increase much more slowly than the total number of subscribers.

