Results of the 2023 Prediction Contest

The results of the 2023 Prediction Contest - congratulations to Martin H, who won with a Brier Score of 0.150.

The results of the 2023 Prediction Contest are out!

Congratulations to Martin H, who won the contest with a Brier Score of 0.150.

We'll be looking below at the overall results, the Hall of Fame Top 10 scorers, the questions where people tended to fall down and how well correlated my own results were (answer: not terrible, but could definitely be better). We'll also see how the Wisdom of Crowds performed, and whether averaging many people's predictions can give you a better sense of the future.

Following the success of last year's Prediction Contest, I'll be running another for 2024 which will launch on 11 January and be open for about a week. I hope everyone who took part this year will want to take part again - and to tell their friends, so we can get the average score even better!

If you want to make sure you hear about the launch of next year's contest, you can sign up to receive an update every time I post by entering your email address into the subscription form below. You can also help by sharing what I write (I rely on word of mouth for my audience).

So, what actually happened?

The questions resolved as follows:

We can see that some of the major errors (both mine and in the average predictions) involved Boris Johnson remaining as an MP (for my part, I didn't put enough weight on the possibility that he might voluntarily resign) and DeSantis beating Trump. Given how comfortably the thresholds were exceeded, people could have been more confident that the Conservatives would be defeated badly at the local elections and that inflation would fall below 5% (though equally, these sorts of events are inherently uncertain, so perhaps a degree of caution was warranted).

I personally was far too overconfident that Government borrowing would fall. I thought the phasing out of the energy support scheme would make more difference, and I completely neglected the impact of higher interest rates on interest payments. I played the odds on the natural disaster question (a disaster of this scale only occurs once every 6-7 years), but this year, unfortunately, was one of the bad years, with the tragic earthquake in Turkey and Syria that killed more than 50,000 people.

So what's a Brier score then, anyway?

If you're already confident you know what a Brier score is - or you're happy just knowing that lower numbers are better - feel free to skip to the next section: the Results.

A Brier score is a way of scoring predictions that rewards both getting the prediction right and being accurate about how confident you were in that prediction. It has the advantage that it is hard to game: if you genuinely think the probability of something happening is 80%, your best strategy is to guess 80%. Or, to put it another way, someone who gets 7 out of 10 things right will get a better (lower) score if they put 70% for each question than if they put 80% or 60%.
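The 7-out-of-10 claim can be checked with a little arithmetic. The sketch below is an illustration, not the scoring code actually used for the contest: it assumes each question scores $(1 - p)^2$ if the event happens and $p^2$ if it doesn't, and computes the expected score for someone whose forecasts come true at a long-run rate `q`. The function name `expected_brier` is hypothetical.

```python
def expected_brier(p: float, q: float) -> float:
    """Expected Brier score on one question.

    p: the probability you forecast.
    q: the true long-run rate at which events like this happen.
    """
    # With probability q the event happens and you score (1 - p)^2;
    # with probability 1 - q it doesn't and you score p^2.
    return q * (1 - p) ** 2 + (1 - q) * p ** 2


# Someone who is right 7 times out of 10 (q = 0.7):
for p in (0.6, 0.7, 0.8):
    print(f"forecast {p:.0%}: expected score {expected_brier(p, 0.7):.3f}")
# forecast 60%: expected score 0.220
# forecast 70%: expected score 0.210
# forecast 80%: expected score 0.220
```

Forecasting the honest 70% gives the lowest expected score, which is what makes the Brier score hard to game.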

The Brier score is calculated as the mean squared error across all the questions. For example, if you predicted something had an 80% chance of happening, your score for that question will be 0.04 (i.e. $(1 - 0.8)^2$) if it does happen, and 0.64 (i.e. $0.8^2$) if it doesn't. The scores for every question are added up and then divided by the number of questions, giving a score between 0 and 1, where lower scores are better. You can therefore improve your Brier score both by getting more things right and by being more realistic ('better correlated') about how likely you are to be right, because getting something wrong that you were only weakly confident about costs you much less than getting something wrong that you were very confident about.
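As a minimal sketch of that calculation (the function name `brier_score` is illustrative, and outcomes are assumed to be recorded as True/False), the score is just the average squared gap between each forecast and 1 (happened) or 0 (didn't):

```python
def brier_score(forecasts: list[float], outcomes: list[bool]) -> float:
    """Mean squared error between probability forecasts and 0/1 outcomes."""
    if len(forecasts) != len(outcomes) or not forecasts:
        raise ValueError("need one outcome per forecast, and at least one forecast")
    total = sum((p - (1.0 if happened else 0.0)) ** 2
                for p, happened in zip(forecasts, outcomes))
    return total / len(forecasts)


print(round(brier_score([0.8], [True]), 2))               # 0.04
print(round(brier_score([0.8], [False]), 2))              # 0.64
print(round(brier_score([0.8, 0.8], [True, False]), 2))   # 0.34
```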

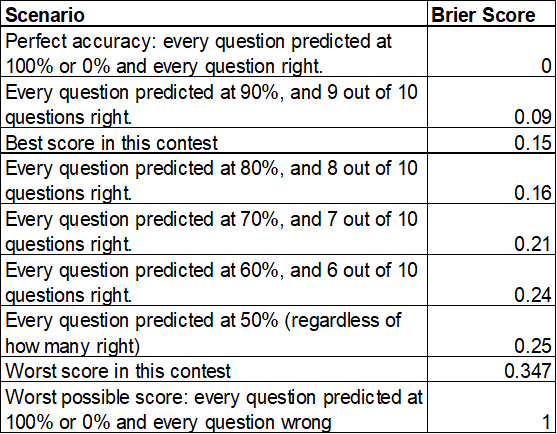

Putting 50% for a probability guarantees a score of 0.25 for that question, whatever happens. In this prediction contest I therefore awarded 0.25 for any question that participants skipped, as this was equivalent to them saying they thought it was as likely as not to happen. Someone could guarantee an overall score of exactly 0.25 by putting 50% for every question; in theory, therefore, no-one should get a worse (higher) Brier score than 0.25 - though in practice, we will see that that is not the case...
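A short sketch of that skip rule, assuming a skipped question is recorded as `None` (the name `score_entry` and the sample outcomes are hypothetical). Because $(0.5 - 1)^2 = (0.5 - 0)^2 = 0.25$, an all-50% or all-blank entry scores exactly 0.25 regardless of how the questions resolve:

```python
from typing import Optional


def score_entry(forecasts: list[Optional[float]], outcomes: list[bool]) -> float:
    """Brier score for one entry, scoring a skipped question (None) as a 50% forecast."""
    if len(forecasts) != len(outcomes):
        raise ValueError("need exactly one outcome per question")
    filled = [0.5 if p is None else p for p in forecasts]
    return sum((p - float(happened)) ** 2
               for p, happened in zip(filled, outcomes)) / len(filled)


outcomes = [True, False, True, True, False]   # hypothetical resolutions
print(score_entry([0.5] * 5, outcomes))       # 0.25
print(score_entry([None] * 5, outcomes))      # 0.25
```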

I find Brier scores slightly counter-intuitive, so below are some hypothetical scenarios and the associated scores:
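Scenarios like these can also be generated directly from the formula. The sketch below is an illustration (not necessarily how the original figures were produced): it assumes every prediction is made at the same confidence and that `right` of the 10 predictions come true. The name `scenario_score` is hypothetical.

```python
def scenario_score(right: int, total: int, confidence: float) -> float:
    """Brier score when every forecast is made at the same confidence and
    `right` of the `total` forecasts come true (the rest don't)."""
    wrong = total - right
    return (right * (1 - confidence) ** 2 + wrong * confidence ** 2) / total


confidences = (0.6, 0.7, 0.8, 0.9)
print("right/10 " + " ".join(f"{c:>6.0%}" for c in confidences))
for right in range(10, 4, -1):
    row = " ".join(f"{scenario_score(right, 10, c):6.3f}" for c in confidences)
    print(f"{right:>8} {row}")
```

One row worth noticing: getting 7 out of 10 right at 90% confidence scores 0.250, no better than putting 50% for everything, which is how overconfidence drags scores above 0.25.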

The Results

A total of 64 people entered the contest, with 55 valid entries[1].

The top ten results were as follows[2]:

Congratulations once again to Martin H for a very impressive score - equivalent to getting more than 8 out of 10 questions right with better than 80% confidence (8 out of 10 at exactly 80% would score 0.160)! I suspect my own high placing reflects the considerable advantage that comes with being the question setter[3]. And congratulations to everyone in the top ten, all of whom have scores roughly equivalent to, or better than, predicting at 75% with perfect correlation.
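One way to translate a Brier score into an 'equivalent confidence': someone who always forecasts `p` and is right exactly a fraction `p` of the time expects a score of $p(1-p)^2 + (1-p)p^2 = p(1-p)$, which can be inverted as $p = \tfrac{1}{2}\left(1 + \sqrt{1 - 4s}\right)$. The helper names below are hypothetical; the two scores are the winning 0.150 and the 10th-placed 0.189.

```python
import math


def calibrated_score(p: float) -> float:
    """Expected Brier score for someone who always forecasts p and is right
    exactly a fraction p of the time: p(1-p)^2 + (1-p)p^2 = p(1-p)."""
    return p * (1 - p)


def equivalent_confidence(score: float) -> float:
    """Invert calibrated_score: the confidence p >= 0.5 that a perfectly
    calibrated forecaster would need to expect this score."""
    if not 0 <= score <= 0.25:
        raise ValueError("only scores between 0 and 0.25 have an equivalent")
    return (1 + math.sqrt(1 - 4 * score)) / 2


print(f"{equivalent_confidence(0.150):.3f}")  # 0.816 (winning score)
print(f"{equivalent_confidence(0.189):.3f}")  # 0.747 (10th place)
```

So the winning score corresponds to perfectly calibrated predictions at roughly 82%, and the 10th-placed score to roughly 75%.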

Overall, the scores ranged from 0.150 at one end to 0.347 at the other[4]. In total, only 30 out of 55 people got scores below 0.25, with 25 out of 55 - nearly half - getting a score worse than if they'd just put 50% for every question (or left every question blank). Turns out predicting stuff is hard! Looking at the scores, it's not so much that people who scored poorly got more things wrong (though they did), but rather that, in general, they were overconfident, getting multiple things wrong that they had assigned 80% or 90% probabilities to. That's a feature, rather than a bug: the contest recognises that the future is uncertain, and to do well one has to be good at quantifying the probability that something will happen - and the Brier score ruthlessly penalises overconfidence.

Was being a professional an advantage?

The majority of the questions were about politics and current affairs. I was curious to know if people who worked in these fields would do better, so I asked people to self-identify as to whether or not their 'job involves working in politics, public policy, media or current affairs (broadly defined).' Just over 1/3 of people indicated that it did.

The results were far from clear-cut, with the winner, Martin H, not working in the field and the worst score coming from someone who did. However, despite making up only 1/3 of entrants, people working in politics/public policy/media accounted for 6 of the top 10 scores, and their average Brier score was 0.025 points lower (just under half a standard deviation) than the average of those who did not work in the field - a small but fairly clear advantage. So there are some benefits to being a professional - which is reassuring, I guess.
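The post doesn't say exactly how the 'half a standard deviation' figure was computed. A minimal sketch, assuming it means the gap between the two groups' mean Brier scores divided by the sample standard deviation of all entrants' scores (the helper `gap_in_sd` is hypothetical):

```python
import statistics


def gap_in_sd(scores: list[float], is_professional: list[bool]) -> tuple[float, float]:
    """Return (gap in mean Brier score, gap in standard deviations) between
    non-professionals and professionals. Positive means professionals scored
    lower, i.e. better."""
    pros = [s for s, pro in zip(scores, is_professional) if pro]
    others = [s for s, pro in zip(scores, is_professional) if not pro]
    gap = statistics.mean(others) - statistics.mean(pros)
    # Assumption: the yardstick is the sample SD of everyone's scores.
    sd = statistics.stdev(scores)
    return gap, gap / sd
```

Whichever standard deviation was actually used, a 0.025 gap being just under half of it implies a spread of a little over 0.05 in the scores.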

What about the Wisdom of Crowds?

There's a theory which says that if you average predictions, the average will be better than most individual sets of predictions. Different people have different information; positive and negative random errors cancel out, and so on. How did that turn out here?

The Wisdom of Crowds entry (see table above) had a Brier score of 0.189 - equal to the 10th best scoring individual[5]. Which is pretty good! It means that averaging everyone's predictions would have beaten over 80% of contestants - and you are much better off trusting the average than any random individual (including some of the people who work in this field!). On the other hand, a few people did significantly better - including the winner (and me!) - which also suggests that we can do better at predicting things than just averaging our guesses.
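A sketch of how a Wisdom of Crowds entry can be built, assuming a simple mean of everyone's probability on each question and complete entries with no skips (the post doesn't specify either, and the entries below are hypothetical). Because squared error is convex, the crowd's error on each question is never larger than the average of the individual errors, so the crowd's Brier score can never be worse than the mean individual score, although it can still lose to the best individuals.

```python
def brier_score(forecasts: list[float], outcomes: list[bool]) -> float:
    """Mean squared error between probability forecasts and 0/1 outcomes."""
    return sum((p - float(o)) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


def crowd_forecast(entries: list[list[float]]) -> list[float]:
    """Average every entrant's probability for each question (one list per entrant)."""
    return [sum(answers) / len(answers) for answers in zip(*entries)]


# Hypothetical entries from three people on three questions.
entries = [[0.9, 0.2, 0.6], [0.7, 0.4, 0.8], [0.6, 0.1, 0.3]]
outcomes = [True, False, True]

crowd = brier_score(crowd_forecast(entries), outcomes)
individual = [brier_score(e, outcomes) for e in entries]
print(f"crowd: {crowd:.3f}")
print("individuals:", [f"{s:.3f}" for s in individual])
```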

How well correlated was I?

One of the principal goals of running and taking part in these sorts of contests is to improve one's own accuracy and correlation: not simply to get more things right[6], but to be better at quantifying one's own uncertainty. If I predict ten things have a 70% chance of happening, ideally seven of them should happen and three shouldn't.

So how did I do? The answer: not too badly, but with significant room for improvement too[7]:

NB: I did not have enough predictions at certain values to make a decent graph, so predictions are grouped in buckets of 90-95%, 80-85% and so on.

I did very well at the top end, making 12 predictions at 90% or 95%, all of which came true. When I thought something was very likely to happen, it did.

However, I was very overconfident at the 80% and 85% level, getting three of the eight predictions I made at these levels wrong. These included the Boris Johnson and Small Boats questions. I was then reasonably well correlated at 70%-75%, before becoming underconfident at 60% and 65%. Not terrible, but plenty of room to improve.
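The bucketing described above can be sketched as follows. This is an illustration rather than the code behind the graph, and it makes one assumption not stated in the post: a forecast below 50% (say 10% that X happens) is flipped into the equivalent forecast above 50% (90% that X doesn't happen), so every bucket sits at 50% or above. The name `calibration_table` is hypothetical. The example reuses the 80-85% figures above: eight predictions, three of them wrong.

```python
from collections import defaultdict


def calibration_table(forecasts: list[float],
                      outcomes: list[bool]) -> dict[int, tuple[int, int, float]]:
    """Group forecasts into buckets (90-95%, 80-85%, ...) and report, for each
    bucket, (events that happened, forecasts made, observed hit rate)."""
    counts = defaultdict(lambda: [0, 0])  # bucket -> [hits, forecasts]
    for p, happened in zip(forecasts, outcomes):
        if p < 0.5:
            # Assumption: a 10% forecast that X happens is treated as a 90%
            # forecast that it doesn't.
            p, happened = 1 - p, not happened
        bucket = round(p * 100) // 10 * 10  # 0.85 -> 80, 0.95 -> 90
        counts[bucket][0] += int(happened)
        counts[bucket][1] += 1
    return {b: (hits, n, hits / n)
            for b, (hits, n) in sorted(counts.items(), reverse=True)}


table = calibration_table([0.8] * 4 + [0.85] * 4, [True] * 5 + [False] * 3)
print(table)  # {80: (5, 8, 0.625)}
```

A well-calibrated forecaster would see each bucket's hit rate land close to the bucket's own value; 62.5% in the 80-85% bucket is the overconfidence described above.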

How do I find out my personal result?

I appreciate most people will not remember what they predicted a year ago! For the coming year's contest, I will attempt to set up the form so that (if people wish) they can be sent a copy of their predictions. For now, however, if you would like to know your Brier Score, your rank or a copy of your predictions, please contact me and I will send it to you[9].

If you found this post interesting, you can help by sharing what I write (I rely on word of mouth for my audience) - or recommending some of the posts above. You can also ensure you never miss a post, by entering your email address into the subscription form below.

And stand by to take and share Prediction Contest 2024 in a couple of weeks!

I note that while the overall outcome was good for me, my calibration seems terrible.

Of the 4 things I predicted with 95% confidence, only 3 happened.

Of the 4 things I predicted with 90% confidence, only 3 happened.

Of the 4 things I predicted with 85% confidence, all happened.

Of the 9 things I predicted with 80% confidence, all happened.

Of the 4 things I predicted with 70% confidence, 3 happened (which seems well calibrated).

Of the 2 things I predicted with 50% confidence, 1 happened (which is well calibrated but meaningless).
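The tallies above can be turned into a small calibration table comparing each stated confidence with how often those things actually happened. The helper `calibration_gaps` is hypothetical; the numbers are taken straight from the list.

```python
def calibration_gaps(tallies: list[tuple[int, int, int]]) -> list[tuple[int, float, float]]:
    """For each (stated %, predictions made, predictions that happened),
    return (stated %, observed %, observed minus stated)."""
    rows = []
    for stated, made, happened in tallies:
        observed = 100 * happened / made
        rows.append((stated, observed, observed - stated))
    return rows


# (stated confidence %, predictions made, how many happened), from the list above.
tallies = [(95, 4, 3), (90, 4, 3), (85, 4, 4), (80, 9, 9), (70, 4, 3), (50, 2, 1)]
for stated, observed, gap in calibration_gaps(tallies):
    print(f"stated {stated}%: happened {observed:.0f}% of the time ({gap:+.0f} points)")
```

Pooling the 90% and 95% predictions gives 6 out of 8 (75%), and pooling the 80% and 85% predictions gives 13 out of 13 (100%). With only four predictions at most levels, though, a single miss moves the observed rate by 25 points.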

I think I default to saying 80% for things I think very likely to happen (hence the large cluster of predictions at that level), but in fact such things happen more than 80% of the time.

Conversely, when I'm very confident that something will happen, I'm sometimes overconfident. Of course, these are only two bad predictions (Boris Johnson continuing as an MP and a British person winning the Nobel prize). In both cases I over-indexed on the base rate, so I'm not sure whether any general conclusion about my confidence levels can be drawn.