Results of the 2025 Forecasting Contest

374 valid entries, 27 beating the wisdom of crowds - and one winner...

The results of the 2025 Forecasting Contest are out!

This year there were a record 374 valid entries. Of these:

238 (64%) did better than would be expected by random chance.

27 (7%) did better than the wisdom of crowds.1

We’ll look below at the overall results, at some of the detailed breakdowns by sex and profession, and at how my own forecasting went this year. The full results for everyone who did better than random chance are also included at the end of the post.

But first, a massive congratulations to this year’s winner, ‘Firestone’, real name Christopher Flint, a former missionary and first class physics graduate from Oxford, who currently works as an Operations Manager at Transport for London.

Chris not only decisively beat the wisdom of crowds, he outdid more than 100 entrants who work in public policy / politics / journalism / similar fields for a living. Given he also beat the wisdom of crowds in 2024 and 2023, coming 6th in both previous years,2 that would suggest his forecasting success is no fluke!

Next year’s contest will open this Sunday 4th January and be open for two weeks.

To be notified when it opens, subscribe here (free).

I’d encourage everyone who took part this year to have another go, regardless of how well you did, and everyone else to give it a shot - and would ask all of you to share it widely once it’s out, to get the pool of entries as big as possible!

How did each question resolve?

*The Politico Poll of Polls has done some odd things a couple of times this year and is only up to date as of 17 December. It’s the one I said we’d go by, so we’re going by it; however, for what it’s worth, I think the averages on electionmaps.uk are closer to what the polls actually say - fortunately, it doesn’t make any difference to how these three questions resolve.

Overall, the results this year were considerably better than last year: the wisdom of crowds was better by 0.27 and the winning score beat last year’s by 0.32. A smaller percentage of entries (7% vs 13%) beat the wisdom of crowds: the higher number of entrants should make it more accurate, and it appears it has.3

The question that, on average, people did worst on (as measured by total average contribution to final Brier score) was Q33, on the Canadian election, followed by Q12 on immigration numbers and Q16 on the Assisted Dying Bill. The question that, on average, people did best on was Q5, Ed Davey remaining as Lib Dem leader, followed by Q1, Keir Starmer remaining as Prime Minister and Q23, Trump not being assassinated.

The overall mean Brier score was 0.242; the median was 0.235.

29% of people said they worked in ‘politics, public policy, media or current affairs (broadly defined)’. These people had a slightly better mean Brier score (0.237, vs 0.244 for those who didn’t) but interestingly were under-represented in the top 27 who beat the wisdom of crowds, making up only 6, or 22%, of that number. First, second and third place were taken by people who didn’t work in these fields.

82% of entries were male and 16% female, with 2% preferring not to say. Men had only a slightly better mean Brier score (0.240 vs 0.252 for women) but dominated those who beat the wisdom of crowds, making up 26 out of the top 27. The highest scoring woman came 17th and the second highest 33rd.4 The 13 worst scores, however, also all belonged to men.

Reader Chris last year asked Deepseek’s AI model Perplexity to answer the questions, first explaining to it in some detail how to make good predictions and how Brier scoring worked (you can read his prompts, and the AI’s predictions and reasoning, here). It got a score of 0.222, which would have put it 136th: better than the median human, but still a long way off the top performers!

How about my own forecasting?

I felt my own forecasting was worse than usual this year, and was relieved I managed to scrape in above the wisdom of crowds in 21st place, with a score of 0.180.5 I’m pleased to have beaten the wisdom of crowds three years in a row, but definitely feel I could have done better.

In particular, I did poorly on the local elections, underestimating the level of swing.6 I also underestimated just how inexplicably long it would take this Government - despite its massive majority - to get legislation through Parliament, which lost me points on three questions. I also made a silly error on interest rates, where I’m really not sure what I was thinking at the time.

More positively, I got the set of domestic policy focused questions (on immigration, NHS waiting lists and house building) right and was suitably cautious about predicting who would be where in the polls, in a year of massive fluctuation. I did well on the international section, including correctly calling that there would be a cease-fire in Gaza but not in Ukraine, although, like many people, I called the Canadian election incorrectly.7

Overall, not bad, could have been better, and in particular I’m going to work harder on the local elections section next year.

The Full Results

I’m publishing here the full rankings of everyone who did better than chance, using the names or pseudonyms they indicated they would be happy to see appear on the internet. If you don’t see your alias here, then sadly, this year you did not do better than chance8 - though I hope you still had fun and will enter again in 2026!

1. Firestone: 0.145

2. James Hannam: 0.147

3. James B: 0.157

4. Andy Morris: 0.161

5. Principal P: 0.166

6. Peter Bennet: 0.167

7. Luke W: 0.169

8. JoeS: 0.170

9. Alex W: 0.171

10. Mike Smith: 0.171

11. Jonathan Portes: 0.172

12. Andy: 0.172

13. QuinL: 0.174

14. Barney Rubble: 0.174

15. Anon: 0.175

16. Robert Emery: 0.176

17. RC: 0.176

18. Ed: 0.177

19. Friso: 0.178

20. RMY: 0.179

21. Edrith: 0.180

22. Martin H: 0.181

23. Anon: 0.181

24. Anon: 0.181

25. Laurie U: 0.183

26. Anon: 0.185

27. Nick O’Connor: 0.185

[Wisdom of Crowds: 0.1878]

28. Eric Rees: 0.188

29. Anon: 0.188

30. Noble: 0.189

31. Westmorland 9: 0.189

32. JGWHerts: 0.189

33. Venetia: 0.189

34. Richard Vadon: 0.189

35. Will Bickford Smith: 0.190

36. Anon: 0.191

37. JO’L: 0.192

38. Andrew B: 0.192

39. Andy Hewitt: 0.193

40. rogerthomasyork: 0.193

41. Claretta: 0.194

42. MIchael Cluff: 0.194

43. Anon: 0.195

44. Rory: 0.195

45. Johnny Rich: 0.196

46. Anon: 0.197

47. Satis: 0.197

48. dodiscimus: 0.197

49. Anon: 0.197

50. Paul Jenkins: 0.198

51. HeatherS: 0.198

52. euanjs: 0.198

53. Mark Cannon: 0.198

54. Richard Arnold: 0.200

55. Bruce G: 0.200

56. Anon: 0.200

57. Alistair B: 0.200

58. MadDad38: 0.201

59. antifrank: 0.202

60. Anon: 0.202

61. Lesley Boorman: 0.202

62. Edward R: 0.203

63. Jake B: 0.203

64. Anon: 0.203

65. Shahid: 0.203

66. rb: 0.204

67. Anon: 0.204

68. Katie T: 0.204

69. Alan Williams: 0.204

70. Matt T: 0.204

71. TangerineSushi: 0.205

72. Wattsgoingon: 0.205

73. GBRChrisA: 0.205

74. alric8: 0.205

75. Anon: 0.206

76. Anon: 0.206

77. Anon: 0.206

78. Anon: 0.207

79. Oscar Bicket: 0.207

80. DrPauli: 0.207

81. Jo Pellereau: 0.207

82. Anon: 0.208

83. Coco: 0.208

84. PaulGG: 0.208

85. PV2005: 0.208

86. Anon: 0.209

87. Henry: 0.210

88. Rajasaur: 0.210

89. Ryan Turner: 0.210

90. Nick: 0.210

91. Anon: 0.210

92. Anon: 0.211

93. Daniel Cremin: 0.211

94. Paul Kearney: 0.211

95. Phil Newton: 0.211

96. Whazell: 0.211

97. Max Rothbarth: 0.211

98. Sam M: 0.213

99. Diaboth: 0.213

100. Robbo S: 0.213

101. Pooch: 0.213

102. Will McLean: 0.213

103. Ben R: 0.214

104. Anon: 0.214

105. Reabank: 0.214

106. John Purvis: 0.214

107. Gommo: 0.214

108. MusterTheSquirrels: 0.214

109. Tino: 0.215

110. Shoeshine: 0.215

111. JPod: 0.215

112. Anon: 0.215

113. Hugo Gye: 0.215

114. Mike Sharp: 0.215

115. Neil R: 0.215

116. Andrew Brook: 0.216

117. Sam R: 0.216

118. A Metcalfe: 0.216

119. Simon Pearce: 0.216

120. Alex Baynham: 0.216

121. Sean S C: 0.217

122. Anon: 0.217

123. Mahoney: 0.217

124. DParks: 0.218

125. NMacck: 0.218

126. Sue Julians: 0.219

127. Nick L: 0.219

128. Alistair MacDonald: 0.219

129. Alice W: 0.219

130. Andrew P Smith: 0.219

131. Anon: 0.220

132. Anon: 0.220

133. Anon: 0.220

134. CleptoMarcus: 0.221

135. Alan Stokes: 0.221

136. RolandW: 0.222

137. Moo: 0.222

138. Anon: 0.223

139. Nic: 0.223

140. literally_Chad: 0.223

141. Ollie RT: 0.224

142. OscarDaBosca: 0.225

143. David: 0.225

144. Bollieboy: 0.225

145. atreic: 0.225

146. Shiv5468: 0.225

147. The Absent Minded Professor: 0.226

148. Cannygeezer: 0.226

149. Daniel: 0.226

150. Timothy Lamb: 0.226

151. Rob C: 0.227

152. Brian: 0.227

153. AlexCM: 0.227

154. RV81: 0.227

155. Aldridge: 0.227

156. Anon: 0.227

157. Josh: 0.227

158. Anon: 0.228

159. Steve Broach: 0.228

160. 0UR0-13: 0.228

161. Anon: 0.229

162. Anon: 0.229

163. Greenfield Gibbons: 0.229

164. wisewizard: 0.229

165. markyboy: 0.229

166. Fcfmc: 0.229

167. ServiceKid74: 0.231

168. Adele Barnett-Ward: 0.231

169. Saddler: 0.231

170. Anon: 0.231

171. Em: 0.231

172. SpongeBrainBob1: 0.232

173. Anon: 0.232

174. Nick Hart: 0.232

175. alan chaplin: 0.232

176. deeharvey: 0.233

177. Ben V: 0.233

178. Ofjmx on Twitter: 0.233

179. Josh: 0.234

180. Joseph: 0.234

181. Matt: 0.234

182. Pmr: 0.234

183. Ian holmes: 0.234

184. BenA: 0.235

185. Jenna Cunningham: 0.235

186. Stephen: 0.235

187. Anon: 0.235

188. Rachel M: 0.235

189. Arj Singh: 0.235

190. RudyH: 0.235

191. Gasman: 0.236

192. Gatehouse123: 0.236

193. John Adams: 0.236

194. Matt N: 0.236

195. Boaly66: 0.237

196. Anon: 0.237

197. Clearlier: 0.238

198. Michael Barge: 0.239

199. Abhishek S: 0.239

200. Mr: 0.239

201. lord satan: 0.239

202. Anon: 0.240

203. Laura Spence: 0.240

204. From the Right Side: 0.240

205. Anon: 0.240

206. JutC.Predict: 0.241

207. siphuncle: 0.241

208. Anon: 0.241

209. MirandaJ: 0.241

210. Sandy Fyfe: 0.242

211. Dan S R: 0.242

212. Hugh Jones: 0.242

213. Phil C: 0.242

214. Ben P: 0.242

215. Steve Rowse: 0.243

216. wallaceme: 0.243

217. Anon: 0.243

218. Zoe Jardiniere: 0.243

219. Andrew21: 0.244

220. Tora Bora: 0.244

221. Anon: 0.244

222. Anon: 0.244

223. Anon: 0.244

224. A Garrido: 0.245

225. Niko: 0.245

226. Anon: 0.245

227. Gannister: 0.246

228. Rachael: 0.246

229. Anon: 0.247

230. Anon: 0.247

231. Anouschka Rajah: 0.247

232. derry: 0.248

233. Majician2000: 0.248

234. Austin platt: 0.249

235. Anon: 0.249

236. Pete Ford: 0.249

237. Rhiannon: 0.250

238. 2029d: 0.250

[+136 other entries]

Appendix: Brier Scores and the Wisdom of Crowds

So what’s a Brier score then, anyway?

A Brier score is a way of scoring predictions that rewards both getting the prediction right and being accurate about how confident you were about that prediction. It has the advantage that it is hard to game: if you genuinely think the probability of something happening is 80%, you should guess 80%.

The Brier score is calculated as the mean squared error across all the questions. For example, if you predicted something had an 80% chance of happening, then if it does happen your score for that question will be 0.04 (i.e. (1 - 0.8)²) and if it doesn’t happen your score will be 0.64 (i.e. 0.8²). The scores for every question are added up and then divided by the number of questions, giving a score between 0 and 1, where lower scores are better. You can therefore improve your Brier score both by getting more things right and by being more realistic (‘better calibrated’) about how likely you are to be right, because you accrue a better score by getting something you were only weakly confident about wrong than by getting something you were very confident about wrong.
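
For anyone who prefers to see the arithmetic as code, here is a minimal sketch of that calculation in Python. The function name `brier_score` and the three-question entry are illustrative assumptions, not the contest's actual scoring code.

```python
def brier_score(forecasts, outcomes):
    """Mean squared error between forecast probabilities and 0/1 outcomes.

    forecasts: probabilities between 0 and 1, one per question.
    outcomes:  1 if the event happened, 0 if it did not.
    """
    if len(forecasts) != len(outcomes):
        raise ValueError("forecasts and outcomes must be the same length")
    squared_errors = [(p - o) ** 2 for p, o in zip(forecasts, outcomes)]
    return sum(squared_errors) / len(squared_errors)


# The 80% example from the text: 0.04 if it happens, 0.64 if it does not.
print(round(brier_score([0.8], [1]), 2))
print(round(brier_score([0.8], [0]), 2))

# Hypothetical three-question entry: two hits and one fairly confident miss.
print(round(brier_score([0.8, 0.9, 0.7], [1, 1, 0]), 3))
```

In the last line the single miss at 70% contributes 0.49 of the 0.54 total before averaging, which is why one confident mistake can outweigh several good calls.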

Putting 50% for a probability guarantees a score for that question of 0.25. In this prediction contest I therefore awarded 0.25 for any question that participants skipped, as this was equivalent to them saying they thought it was as likely as not to happen. Someone could ensure they got no higher than 0.25 overall by putting 50% for every question; in theory, therefore, if people are correct about their confidence levels, no-one should get a higher Brier score than 0.25 - though in practice, that’s not the case.
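
The 0.25 baseline can be sketched in a few lines of Python. The helper `brier_with_skips`, the use of `None` for a skipped question and the four made-up outcomes are all assumptions for illustration, not the contest's actual scoring code.

```python
def brier_with_skips(forecasts, outcomes):
    """Brier score where a skipped question (None) is treated as a 50% forecast."""
    filled = [0.5 if p is None else p for p in forecasts]
    return sum((p - o) ** 2 for p, o in zip(filled, outcomes)) / len(outcomes)


outcomes = [1, 0, 1, 0]  # hypothetical resolutions

# Answering 50% everywhere gives exactly 0.25, whatever the outcomes are.
all_fifty = brier_with_skips([0.5, 0.5, 0.5, 0.5], outcomes)

# Skipping every question is scored identically.
all_skipped = brier_with_skips([None, None, None, None], outcomes)

# Two confident misses are enough to end up worse than 0.25.
overconfident = brier_with_skips([0.1, 0.9, None, 0.5], outcomes)

print(all_fifty, all_skipped, overconfident > 0.25)
```

This is why a score above 0.25 is possible in practice: it takes forecasts that are confidently wrong, not merely uninformed.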

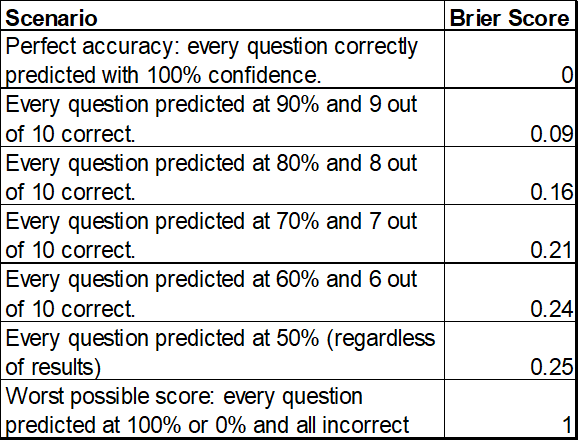

I find Brier scores slightly counter-intuitive, so below are set out some hypothetical scenarios and the associated scores:

What about the Wisdom of Crowds?

There’s a theory which says that if you average predictions, the average will be better than most individual sets of predictions. Different people have different information; positive and negative random errors cancel out, and so on. The Wisdom of Crowds score is found by taking the mean of everyone’s forecast for each question and then scoring those means as if they were an entry in their own right.
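
As a rough sketch of that procedure in Python: the three entrants, their forecasts and the outcomes below are invented for illustration, and the helper names `brier_score` and `crowd_forecast` are assumptions rather than the contest's own code.

```python
def brier_score(forecasts, outcomes):
    """Mean squared error between forecast probabilities and 0/1 outcomes."""
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(outcomes)


def crowd_forecast(entries):
    """Per-question arithmetic mean of every entrant's probability."""
    return [sum(question) / len(question) for question in zip(*entries)]


# Hypothetical data: three entrants (rows) answering three questions (columns).
entries = [
    [0.9, 0.5, 0.4],
    [0.5, 0.1, 0.8],
    [0.7, 0.3, 0.3],
]
outcomes = [1, 0, 1]

crowd = crowd_forecast(entries)
crowd_score = brier_score(crowd, outcomes)
individual_scores = [brier_score(entry, outcomes) for entry in entries]

# Count how many entrants the averaged forecast beats (lower is better).
beaten = sum(score > crowd_score for score in individual_scores)
print(f"crowd: {crowd_score:.3f}, beats {beaten} of {len(entries)} entrants")
```

In this toy example the averaged forecast beats two of the three entrants but not the best one, which mirrors the pattern in the real results: the crowd is hard to beat, but not unbeatable.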

The Wisdom of Crowds entry had a Brier score of 0.188 - better than 93% of contestants! Which is pretty good! It means that in most cases you are much better off trusting the average than any single individual (including many people who are specialists in the field). On the other hand, there are a few people who did significantly better - including some people who’ve done so multiple years running - which also shows it is very much possible to do better in predicting things than just averaging our guesses. It is, however, something which does not automatically come with domain knowledge (though it can be helped by it), but rather is its own skill.

Footnotes

1. There is the usual detailed section on Brier Scoring and the Wisdom of Crowds in an appendix at the end of this piece.

2. Albeit in a smaller field.

3. Though one can never tell; maybe the questions were easier.

4. Any women who are unhappy about this, you know what to do: encourage your female friends to enter next year to defend the honour of your sex!

5. Though was one place ahead of last year’s winner!

6. And placing too high a consideration on the fact that some elections were postponed, meaning that fewer seats were being contested.

7. Though even with hindsight, think my 90% prediction was reasonable at the time - 1 in 10 chances do happen.

8. Or potentially you have forgotten the alias under which you entered!
